This lesson continues the previous one and introduces some applications of functions that give rise to new, important, and practical concepts in the Python programming language. The topic of functions in Python does not end with this lesson; the remaining points are presented in the next lesson.

✔ Level: Medium

Topics

Lesson 13: Function in Python: Coroutine, Generator, Decorator and Lambda

Decorator

Generator

Continued Coroutine: yield

List Comprehensions

Generator Expressions

lambda and anonymous functions

Decorator

Decorators [PEP 318] are functions that wrap other functions or classes. They are very useful tools in Python. They let the programmer extend the behavior and features of functions and classes without changing their bodies, and they reduce the amount of code that has to be written. This section focuses on applying decorators to functions; class decorators are examined in the lesson on classes.

To decorate a function, write @decorator_name on the line directly above the function header:

def decorator_name(a_function):

    return a_function  # placeholder: a real decorator usually returns a new (wrapper) function

@decorator_name

def function_name():

    print("Something!")

function_name()

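For the Python interpreter, placing @decorator_name above the function header is roughly equivalent to the following code (strictly speaking, the result is bound back to the original name, as in function_name = decorator_name(function_name); the separate name wrapper is used here only for clarity):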

wrapper = decorator_name(function_name)

wrapper()

Everything in Python is an object, even something as complex as a function. Recall from the previous lesson that functions in Python are "first-class" objects: like any other object, a function can be passed as an argument to another function. The code above shows exactly this: one function (function_name) is passed to another function (decorator_name).

Consider the example below:

>>> def decorator_name(func):

...     def wrapper():

...         print("Something is happening before the function is called.")

...         func()

...         print("Something is happening after the function is called.")

...     return wrapper

...

>>>

>>> @decorator_name

... def function_name():

...     print("Something!")

...

>>>

>>> function_name()

Something is happening before the function is called.

Something!

Something is happening after the function is called.

>>>

The example above can also be written without the @ syntax, in the following simpler form:

>>> def decorator_name(func):

...     def wrapper():

...         print("Something is happening before the function is called.")

...         func()

...         print("Something is happening after the function is called.")

...     return wrapper

...

>>>

>>> def function_name():

...     print("Something!")

...

>>>

>>> wrapper = decorator_name(function_name)

>>> wrapper()

Something is happening before the function is called.

Something!

Something is happening after the function is called.

>>>

Comparing the two examples above shows that decorators create a wrapper around our functions and classes. When the definition of function_name is executed, the Python interpreter notices its decorator. It passes the function object to the specified decorator (decorator_name) and binds the name function_name to the new object that the decorator returns, here the wrapper function. From then on, calling function_name() actually runs wrapper.

For functions that take parameters, note that when the decorated function is called with arguments, the Python interpreter passes those arguments to the decorator's wrapper function. The wrapper must therefore accept them and pass them on to the original function.
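As a minimal sketch of this behavior, the wrapper below uses *args and **kwargs to accept any arguments and forward them to the original function. The decorator shout and the function greet are hypothetical names chosen for illustration:

```python
def shout(func):
    # The wrapper receives whatever arguments the caller passes to the
    # decorated function and forwards them unchanged to the original one.
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        return result.upper()
    return wrapper


@shout
def greet(name, punctuation="!"):
    return "hello, " + name + punctuation


print(greet("python"))                  # HELLO, PYTHON!
print(greet("world", punctuation="?"))  # HELLO, WORLD?
```

Using *args and **kwargs keeps the wrapper independent of the decorated function's exact signature.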

More than one decorator can be applied to a function or class. In that case, the order in which the decorators are listed matters to the Python interpreter: the decorator closest to the def line is applied first, and the topmost one is applied last.
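A small sketch of how the order affects the result is shown below. The decorators bold and italic are hypothetical examples that wrap a function's return value in HTML-like tags:

```python
def bold(func):
    def wrapper():
        return "<b>" + func() + "</b>"
    return wrapper


def italic(func):
    def wrapper():
        return "<i>" + func() + "</i>"
    return wrapper


@bold      # applied last (outermost)
@italic    # applied first (closest to the function)
def text():
    return "hi"


@italic    # the same decorators in the opposite order
@bold
def text2():
    return "hi"


# text is equivalent to bold(italic(original_text))
print(text())   # <b><i>hi</i></b>
print(text2())  # <i><b>hi</b></i>
```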

Decorators can also take arguments:

@decorator_name(params)

def function_name():

    print("Something!")

function_name()

In this case, the Python interpreter first calls the decorator function with the given arguments. It then calls the result with the function being decorated:

temp_decorator = decorator_name(params)

wrapper = temp_decorator(function_name)

wrapper()

Note the example code below:

>>> def formatting(lowercase=False):

...     def formatting_decorator(func):

...         def wrapper(text=''):

...             if lowercase:

...                 func(text.lower())

...             else:

...                 func(text.upper())

...         return wrapper

...     return formatting_decorator

...

>>>

>>> @formatting(lowercase=True)

... def chaap(message):

...     print(message)

...

>>>

>>> chaap("I Love Python")

i love python

>>>

@functools.wraps

In Python, the term higher-order functions refers to functions that act on other functions or return a new function as their output. The standard Python library includes a module called functools, which provides a set of helper tools for such functions [Python documents]. One of the functions in this module is wraps.

But why is it important to introduce this function here? When we use a decorator, a new function replaces our original function.

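Because of this, information about the original function is lost. For example, suppose a decorator replaces a function f with a new function named with_logging. In that case, print(f.__name__) prints the name of the new function (with_logging) instead of the original function's name (f).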
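The sample code for this case is not included here. The following sketch reconstructs it, assuming it matches the logged example shown later in this section but without @wraps:

```python
def logged(func):
    # No functools.wraps here, so f's metadata is NOT copied to the wrapper.
    def with_logging(*args, **kwargs):
        print(func.__name__ + " was called")
        return func(*args, **kwargs)
    return with_logging


@logged
def f(x):
    """does some math"""
    return x + x * x


print(f.__name__)  # with_logging
print(f.__doc__)   # None: the wrapper has no docstring of its own
print(f(2))        # prints "f was called", then 6
```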

Unless we take care, decorating a function always loses this information about the original function. The wraps function prevents this and preserves the information about our original function. It is itself a decorator. Its job is to copy information (such as __name__ and __doc__) from the function it receives as an argument to the function it decorates:

>>> from functools import wraps

>>>

>>> def logged(func):

...     @wraps(func)

...     def with_logging(*args, **kwargs):

...         print(func.__name__ + " was called")

...         return func(*args, **kwargs)

...     return with_logging

...

>>>

>>> @logged

... def f(x):

...     """does some math"""

...     return x + x * x

...

>>>

>>> print(f.__name__)

f

>>> print(f.__doc__)

does some math

>>>

As the last example in the decorator discussion, the following code uses a decorator to measure the execution time of a function [Source]:

>>> import functools

>>> import time

>>>

>>> def timer(func):

...     """Print the runtime of the decorated function"""

...     @functools.wraps(func)

...     def wrapper_timer(*args, **kwargs):

...         start_time = time.perf_counter()

...         value = func(*args, **kwargs)

...         end_time = time.perf_counter()

...         run_time = end_time - start_time

...         print(f"Finished {func.__name__!r} in {run_time:.4f} secs")

...         return value

...     return wrapper_timer

...

>>>

>>> @timer

... def waste_some_time(num_times):

...     result = 0

...     for _ in range(num_times):

...         for i in range(10000):

...             result += i**2

...

>>>

>>> waste_some_time(1)

Finished 'waste_some_time' in 0.0072 secs

>>> waste_some_time(999)

Finished 'waste_some_time' in 2.6838 secs

In this example, time.perf_counter() is used to measure time intervals. This function is only available from Python 3.3 onwards.

If the print call in the wrapper_timer function is unfamiliar, refer to the f-string section of Lesson 7 [lesson 7 f-string].

Generator

Generators [PEP 255] are functions whose behavior is similar to that of iterator objects (see iterators in Lesson 9).

When a normal function is called, its body is executed until it reaches a return statement, and then the function ends. Calling a generator function, however, does not execute its body. Instead, it returns a generator object, from which the expected values can be requested one after another using the __next__() method (next() in Python 2.x).

Generator functions are lazy [Wikipedia]. They do not store all their data at once; they produce each value only when it is requested. So when dealing with large data sets, generators use memory much more efficiently, and we also do not have to wait for the whole sequence to be generated before we can start using it!

To create a generator function, it is enough to use one or more yield statements in a normal function. When such a function is called, the Python interpreter returns a generator object. This object can produce a sequence of values (or objects) for use in iteration.

The yield statement looks similar to the return statement, but it does something different: it hands out a value and pauses the execution of the function at that point in its body. We can use the __next__() method (next() in Python 2.x) to get each value produced by yield:

>>> def a_generator_function():

...     for i in range(3):  # i: 0, 1, 2

...         yield i*i

...     return

...

>>> my_generator = a_generator_function()  # Create a generator

>>>

>>> my_generator.__next__()  # Use my_generator.next() in Python 2.x

0

>>> my_generator.__next__()

1

>>> my_generator.__next__()

4

>>> my_generator.__next__()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

>>>

Note that a generator reports that it has finished producing values by raising the StopIteration exception. When statements such as for are used, however, this exception is handled automatically and the loop simply ends. The previous example can be rewritten as follows:

>>> def a_generator_function():

...     for i in range(3):  # i: 0, 1, 2

...         yield i*i

...     return

...

>>>

>>> for i in a_generator_function():

...     print(i)

...

0

1

4

>>>
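The following is a rough sketch of what the for statement does with the generator behind the scenes. It is simplified and ignores details such as the initial call to iter(). The loop is written by hand with the built-in next() and a try/except block that stops at StopIteration:

```python
def a_generator_function():
    for i in range(3):  # i: 0, 1, 2
        yield i * i


# Roughly what "for i in a_generator_function(): print(i)" does
results = []
gen = a_generator_function()
while True:
    try:
        i = next(gen)          # the built-in next() calls gen.__next__()
    except StopIteration:      # the generator is exhausted: leave the loop
        break
    print(i)
    results.append(i)

print(results)  # [0, 1, 4]
```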

To better understand how generator functions work, imagine that you are asked to implement your own function similar to Python's range() function. What would your solution be? Create an empty list or tuple and fill it with a loop?! That may work for small ranges, but do you have enough memory and time to build a range of 100 million numbers? A generator function solves this problem easily and correctly:

>>> def my_range(stop):

...     number = 0

...     while number < stop:

...         yield number

...         number = number + 1

...     return

...

>>>

>>> for number in my_range(100000000):

...     print(number)

Features of the Generator function

- A generator function contains one or more yield statements.

- When a generator function is called, its body is not executed; instead, a generator object is returned for that function.

- Using the yield statement, we can pause anywhere in a generator function and get the produced value with the __next__() method (next() in Python 2.x). The first call to __next__() runs the function until it reaches a yield statement. At that point, yield produces a result and the execution of the function is suspended. Calling __next__() again resumes execution right after that same yield statement.

- There is usually no need to call __next__() directly. Generator functions are typically consumed by statements such as for, or by functions such as sum() that accept a sequence.

- When a generator has finished producing values, it raises a StopIteration exception at its stopping point. If __next__() is called directly, the program must handle this exception.

- Don't forget that a return statement anywhere in the body of a function ends its execution at that point, and generator functions are no exception!

- You can close a generator object by calling its close() method. Note that after close() has been called, requesting another value (with __next__()) raises a StopIteration exception.
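The following sketch illustrates several of these points at once. The generator first_evens is a hypothetical example, not taken from the lesson. It stops with return, is consumed by sum() and list(), and is closed early with close(). The sketch also uses the built-in next() with a default value, which returns that default instead of raising StopIteration:

```python
def first_evens(limit):
    n = 0
    while True:
        if n >= limit:
            return              # return ends the generator; StopIteration follows
        if n % 2 == 0:
            yield n
        n += 1


print(sum(first_evens(10)))   # 20  (0 + 2 + 4 + 6 + 8)
print(list(first_evens(10)))  # [0, 2, 4, 6, 8]

gen = first_evens(10)
print(next(gen))              # 0
gen.close()                   # close the generator early
print(next(gen, "done"))      # done  (the default replaces StopIteration)
```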

Consider another example:

>>> def countdown(n):

...     print("Counting down from %d" % n)

...     while n > 0:

...         yield n

...         n -= 1

...     return

...

>>>

>>> countdown_generator = countdown(10)

>>>

>>> countdown_generator.__next__()

Counting down from 10

10

>>> countdown_generator.__next__()

9

>>> countdown_generator.__next__()

8

>>> countdown_generator.__next__()

7

>>>

>>> countdown_generator.close()

>>>

>>> countdown_generator.__next__()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

>>>

Tip

A generator object can be converted to a list object using the list() function:

>>> countdown_list = list(countdown(10))

Counting down from 10

>>>

>>> countdown_list

[10, 9, 8, 7, 6, 5, 4, 3, 2, 1]

>>>

Continued Coroutine: yield

Since Python 2.5, new features have been added to generator functions [PEP 342]. Suppose that, inside a function, we place the yield expression on the right-hand side of an assignment operator (=). The function then behaves differently, and in Python this kind of function is called a coroutine. We can now send values of our choice into the generator function, as in the following example:

>>> def receiver():

...     print("Ready to receive")

...     while True:

...         n = (yield)

...         print("Got %s" % n)

...

>>>

>>> receiver_generator = receiver()

>>> receiver_generator.__next__()  # Python 3.x - in Python 2.x use .next()

Ready to receive

>>> receiver_generator.send('WooW!!')

Got WooW!!

>>> receiver_generator.send(1)

Got 1

>>> receiver_generator.send(':)')

Got :)

A coroutine is run in the same way as a generator, except that it also provides the send() method for sending values into the function.

Calling a coroutine function does not execute its body; it returns a generator object. The __next__() method (next() in Python 2.x) advances execution to the first yield, where the function is suspended and becomes ready to receive a value. The send() method sends the desired value into the function, where it becomes the value of the (yield) expression in the coroutine. After receiving the value, the coroutine continues running until the next yield (if any) or the end of the function body.

When working with coroutines, a decorator can be used to avoid making that initial __next__() call by hand:

>>> def coroutine(func):

...     def start(*args, **kwargs):

...         generator = func(*args, **kwargs)

...         generator.__next__()

...         return generator

...     return start

...

>>>

>>> @coroutine

... def receiver():

...     print("Ready to receive")

...     while True:

...         n = (yield)

...         print("Got %s" % n)

...

>>>

>>> receiver_generator = receiver()

Ready to receive

>>> receiver_generator.send('Hello World')  # Note: no initial .next()/.__next__() needed

Got Hello World

A coroutine can run indefinitely unless the program stops it by calling the close() method or its function body finishes executing.

If the send() method is called after the coroutine has finished, a StopIteration exception is raised:

>>> receiver_generator.close()

>>> receiver_generator.send('value')

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

A coroutine can also produce and return output while it receives values:

>>> def line_splitter(delimiter=None):

...     print("Ready to split")

...     result = None

...     while True:

...         line = yield result

...         result = line.split(delimiter)

...

>>>

>>> splitter = line_splitter(",")

>>>

>>> splitter.__next__()  # Python 3.x - in Python 2.x use .next()

Ready to split

>>>

>>> splitter.send("A,B,C")

['A', 'B', 'C']

>>>

>>> splitter.send("100,200,300")

['100', '200', '300']

>>>

What happened?!

The line_splitter function is called with the argument ",". As we know, at this point the only thing that happens is that a generator object is created; none of the lines in the function body are executed. Calling splitter.__next__() executes the body up to the first yield. It prints "Ready to split", defines the result variable with the initial value None, enters the while loop (whose condition is always true), and reaches the line line = yield result. On this line, the expression on the right of the assignment is evaluated first, so the value of result (None) is returned outside the function, and then the function is suspended. Note, however, that the assignment on this line has not been completed yet!

Next, calling splitter.send("A,B,C") resumes the function. The string "A,B,C" becomes the value of the yield expression and is assigned to line, which completes the statement line = yield result. On the next line, the string in line is split on the delimiter (set to "," at the start), and the resulting list is assigned to result, replacing its previous value None. The end of the loop body is reached, the while condition holds again, and execution continues until it once more reaches line = yield result. This time, unlike the first pass, result is not None. The list ['A', 'B', 'C'] produced in the previous pass is yielded and appears in the output as the return value of send(). The function is then suspended again, waiting for one of the methods send(), __next__(), or close().

The call splitter.send("100,200,300") proceeds in the same way, and so on.

To summarize the line line = yield result: to perform an assignment, Python first evaluates the expression on the right and then assigns the result to the name on the left. So the interpreter first executes yield result, which returns the value of the result variable (None on the first pass of the loop) to the caller outside the function. Only when the function is resumed does the assignment complete, storing the value passed to send() in the line variable.
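To make this order of events visible, here is a sketch of the same line_splitter with one extra print call added after the assignment. The print call is an addition for illustration, not part of the original example:

```python
def line_splitter(delimiter=None):
    print("Ready to split")
    result = None
    while True:
        line = yield result           # hand out result, then wait for send()
        print("received:", line)      # runs only after send() resumes us
        result = line.split(delimiter)


splitter = line_splitter(",")
first = splitter.__next__()           # runs up to the first yield; yields None
print(first)                          # None
out = splitter.send("A,B,C")          # "A,B,C" becomes the value of (yield result)
print(out)                            # ['A', 'B', 'C']
```

The printed lines appear in this order: "Ready to split", None, "received: A,B,C", and then ['A', 'B', 'C'].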

The topic of coroutines goes beyond what can be covered in this lesson. For more examples, applications, and details on coroutines in Python, David Beazley's presentation at PyCon 2009 is a useful resource:

PDF: [A Curious Course on Coroutines and Concurrency]

VIDEO: [YouTube]

List Comprehensions

A list comprehension is an operation that applies an expression to each member of a list (or any other iterable) and collects the results in a new list object [PEP 202]:

>>> numbers = [1, 2, 3, 4, 5]

>>> squares = [n * n for n in numbers]

>>>

>>> squares

[1, 4, 9, 16, 25]

>>>

The code sample above is equivalent to:

>>> numbers = [1, 2, 3, 4, 5]

>>> squares = []

>>> for n in numbers:

...     squares.append(n * n)

...

>>>

>>> squares

[1, 4, 9, 16, 25]

The general syntax of List Comprehensions is as follows:

[expression for item1 in iterable1 if condition1

            for item2 in iterable2 if condition2

            ...

            for itemN in iterableN if conditionN]

# This syntax is roughly equivalent to the following code:

s = []

for item1 in iterable1:

    if condition1:

        for item2 in iterable2:

            if condition2:

                ...

                for itemN in iterableN:

                    if conditionN:

                        s.append(expression)
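As a quick check of this equivalence, the comprehension and the nested loops below produce the same list. The lists a and b are the same sample data used in the examples below, and the condition y != "b" is an arbitrary choice for illustration:

```python
a = [-3, 5, 2, -10, 7, 8]
b = "abc"

# Two for clauses, each with a condition, in a list comprehension ...
pairs = [(x, y) for x in a if x > 0 for y in b if y != "b"]

# ... and the equivalent nested loops, following the template above
s = []
for x in a:
    if x > 0:
        for y in b:
            if y != "b":
                s.append((x, y))

print(pairs == s)  # True
print(pairs[:2])   # [(5, 'a'), (5, 'c')]
```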

Here are some more examples:

>>> a = [-3,5,2,-10,7,8]

>>> b = 'abc'

>>> [2*s for s in a]

[-6, 10, 4, -20, 14, 16]

>>> [s for s in a if s >= 0]

[5, 2, 7, 8]

>>> [(x,y) for x in a for y in b if x > 0]

[(5, 'a'), (5, 'b'), (5, 'c'), (2, 'a'), (2, 'b'),

(2, 'c'),

(7, 'a'), (7, 'b'), (7, 'c'), (8, 'a'), (8, 'b'),

(8, 'c')]

>>> import math

>>> c = [(1,2), (3,4), (5,6)]

>>> [math.sqrt(x*x+y*y) for x,y in c]

[2.23606797749979, 5.0, 7.810249675906654]

Note that if each result of a list comprehension consists of more than one value, those values must be enclosed in parentheses (that is, written as a tuple).

Consider the example [(x,y) for x in a for y in b if x > 0] and its output. By contrast, the Python interpreter considers the following statement invalid:

>>> [x,y for x in a for y in b]

File "<stdin>", line 1

[x,y for x in a for y in b]

^

SyntaxError: invalid syntax

>>>

One more important point concerns the iteration variable of a list comprehension in Python 2.x versus 3.x. The same code behaves differently in the two versions. In Python 2.x, the iteration variable (for example, x) does not get a separate scope. As a result, when the comprehension changes its value, a variable with the same name outside the expression changes as well. According to Guido van Rossum, this "dirty little secret" has been fixed in Python 3.x. [more details]
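The original side-by-side comparison is not reproduced here. The following minimal sketch shows the Python 3 behavior described above (the variable names are illustrative):

```python
x = "outer value"

squares = [x * x for x in range(3)]  # this x is local to the comprehension

print(squares)  # [0, 1, 4]
print(x)        # outer value  (in Python 2.x, x would now be 2)
```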

Generator Expressions

Generator expressions work like list comprehensions, but they produce a generator object. To create one, simply use parentheses () instead of the square brackets [] of a list comprehension [PEP 289]:

>>> a = [1, 2, 3, 4]

>>> b = (10*i for i in a)

>>>

>>> b

<generator object <genexpr> at 0x7f488703aca8>

>>>

>>> b.__next__()  # Python 3.x - in Python 2.x use .next()

10

>>> b.__next__()  # Python 3.x - in Python 2.x use .next()

20

>>>

Understanding the difference between generator expressions and list comprehensions is very important. A list comprehension produces the complete result of the operation at once, as a list object. A generator expression, by contrast, produces an object that knows how to generate the results step by step. Understanding this difference plays an important role in improving program performance and reducing memory consumption.
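Here is a minimal sketch of the memory difference using sys.getsizeof(). The exact sizes depend on the Python version and platform, so the comments give only rough orders of magnitude. Note also that getsizeof() measures only the container itself, not the integers it refers to:

```python
import sys

n = 1_000_000
as_list = [i * i for i in range(n)]  # all one million results are stored at once
as_gen = (i * i for i in range(n))   # only the recipe for producing them is stored

print(sys.getsizeof(as_list))  # several megabytes
print(sys.getsizeof(as_gen))   # a few hundred bytes at most

print(sum(as_gen) == sum(as_list))  # True: same values, produced one at a time
```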

In the following example, out of all the lines in the file The_Zen_of_Python.txt, only the comment lines (those starting with #) are printed.
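The original listing is not included here, so the following is a sketch of what such code might look like. To keep it self-contained, it first writes a small sample file into a temporary directory. The file contents are made up for illustration. The comments # 1 to # 4 mark the four steps explained in the next paragraph:

```python
import os
import tempfile

# Hypothetical sample file: these contents are made up for this sketch.
sample = (
    "# The Zen of Python\n"
    "Beautiful is better than ugly.\n"
    "   # an indented comment\n"
    "Explicit is better than implicit.\n"
)
path = os.path.join(tempfile.mkdtemp(), "The_Zen_of_Python.txt")
with open(path, "w") as out:
    out.write(sample)

f = open(path)                                      # 1: open the file (nothing read yet)
lines = (line.strip() for line in f)                # 2: lazily strip each line
comments = (t for t in lines if t.startswith("#"))  # 3: lazily keep comment lines
for c in comments:                                  # 4: the loop drives both generators
    print(c)
f.close()
```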

In the first line, the file The_Zen_of_Python.txt is opened. In the second line, a generator expression creates a generator object that gives access to the lines of the file and strips them (removes any whitespace at the beginning and end of each line). Note that no lines have been read from the file yet; only the ability to request and step through them line by line has been created. In the third line, another generator object (again created with a generator expression) filters the comment lines, using the lines object from the previous step. The file still has not been read, because no value has yet been requested from either generator object (lines or comments). Finally, in the fourth line, the for loop drives the comments object, which in turn drives the lines object according to the operations defined for it.

The The_Zen_of_Python.txt file used in this example is very small, but you can see the benefit of generator expressions by extracting the comments from a multi-gigabyte file!

Tip

A generator object created with a generator expression can also be converted to a list object using the list() function:

>>> comment_list = list(comments)

>>> comment_list

['# File Name: The_Zen_of_Python.txt',

'# The Zen of Python',

'# PEP 20: https://www.python.org/dev/peps/pep-0020']

lambda and anonymous functions

In Python, anonymous functions, or lambda functions, are functions that can take any number of arguments but whose body must consist of a single expression. They are created with the lambda keyword, using the following pattern:

lambda args : expression

In this template, args stands for any number of arguments separated by commas (,), and expression stands for a single Python expression, which cannot contain statements such as for or while.

Consider the following function as an example:

>>> def a_function(x, y):

... return x + y

...

>>>

>>> a_function(2, 3)

5

Written as an anonymous function, it looks like this:

>>> a_function = lambda x,y : x+y

>>> a_function(2, 3)

5

Or:

>>> (lambda x,y: x+y)(2, 3)

5

Where are lambda functions mainly used?

These functions are mostly used when we want to pass a short function as an argument to another function.

For example, recall from Lesson 8 that the sort() method sorts the members of a list object and has an optional argument called key. Passing a one-argument function as key transforms each member of the list before comparison and sorting take place (for example, converting it to lowercase):

>>> L = ['a', 'D', 'c', 'B', 'e', 'f', 'G', 'h']

>>> L.sort()

>>> L

['B', 'D', 'G', 'a', 'c', 'e', 'f', 'h']

>>> L.sort(key=lambda n: n.lower())

>>> L

['a', 'B', 'c', 'D', 'e', 'f', 'G', 'h']

>>>
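The same pattern applies to other built-ins that accept a function argument, such as sorted(), map(), and filter(). The data below is made up for illustration:

```python
pairs = [("banana", 3), ("apple", 5), ("cherry", 1)]

# Sort by the second item of each tuple
by_count = sorted(pairs, key=lambda p: p[1])
print(by_count)  # [('cherry', 1), ('banana', 3), ('apple', 5)]

# A lambda passed to map() and to filter()
doubled = list(map(lambda n: n * 2, [1, 2, 3]))
print(doubled)   # [2, 4, 6]
odds = list(filter(lambda n: n % 2 == 1, range(6)))
print(odds)      # [1, 3, 5]
```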

😊 I hope it was useful

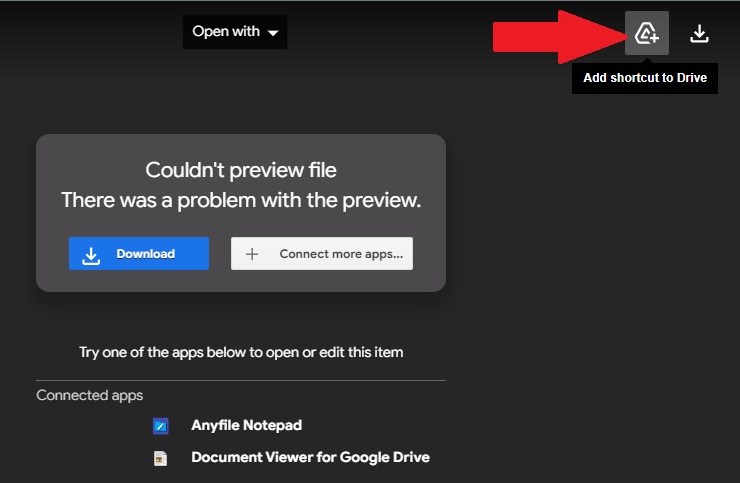

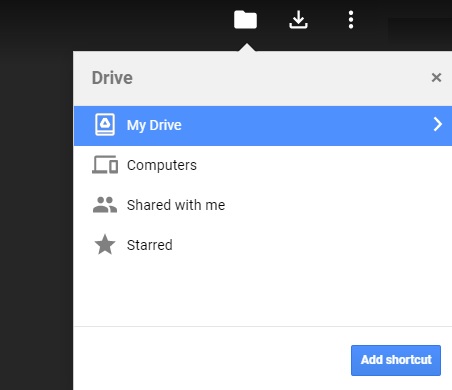

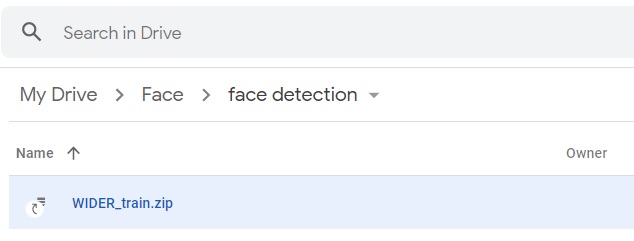
